5.6 Introduction to the Faster RCNN Interface

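Learning objectives

- Objectives

  - Understand the COCO dataset and how to use its API

  - Master the use of the FasterRCNN interface

- Application

  - Apply FasterRCNN to train a model and run prediction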

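5.6.1 Introduction to the COCO Dataset

5.6.1.1 The COCO Dataset

- COCO official website: http://cocodataset.org

MS COCO (Microsoft COCO: Common Objects in Context) is a well-known dataset in object detection. It was built by Microsoft and covers tasks including detection, segmentation, and keypoints. MS COCO was designed mainly to address three problems: detecting non-iconic views of objects (what is usually called detection), contextual reasoning between objects, and the precise 2D localization of objects (what is usually called segmentation).

Compared with the PASCAL VOC dataset, COCO images are natural images of everyday scenes containing common objects. Backgrounds are more complex, there are more objects per image, and the objects are smaller, so tasks on COCO are harder. For detection, a model's quality is now more often judged by its results on COCO.

- Most images come from everyday life, with more complex backgrounds

- More object instances per image, 7.7 on average

- More small objects

- Stricter evaluation criteria

Comparison and characteristics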

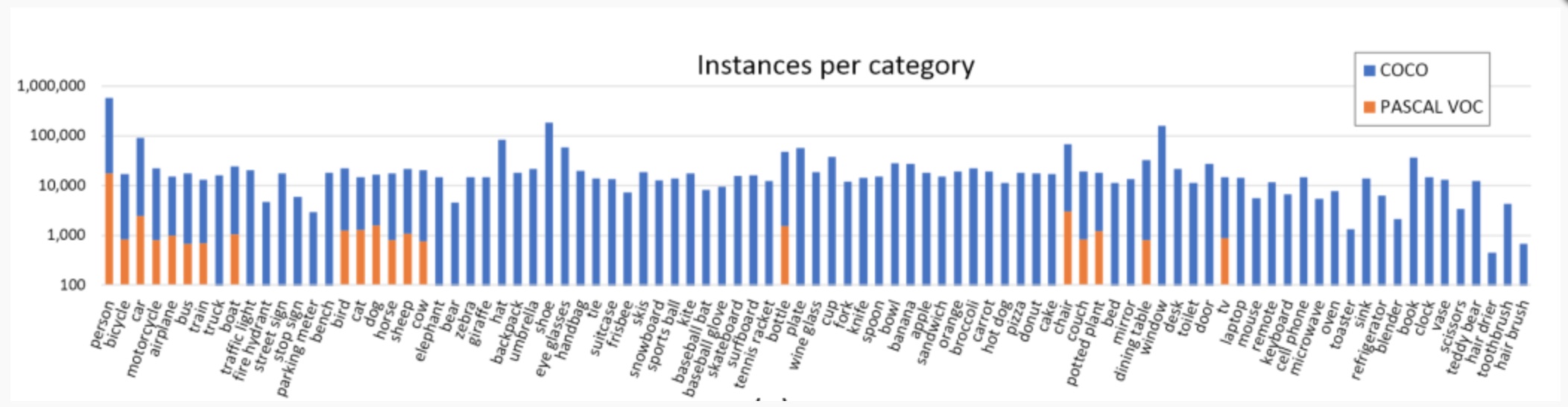

- 1. MS COCO contains 91 categories in total; the number of images per category is shown below:

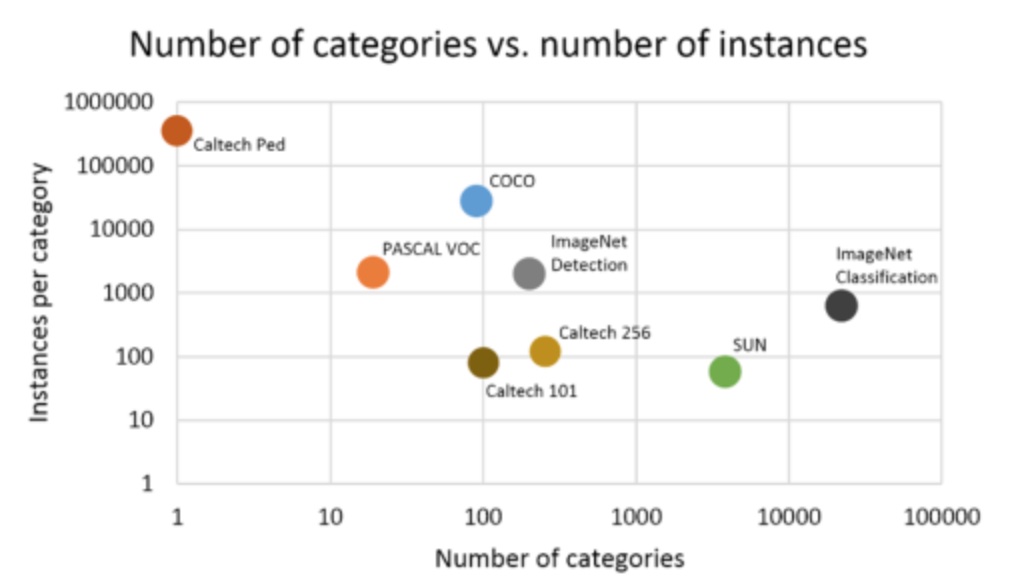

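- 2. The total number of categories in several datasets and the total number of instances per category. An instance is one object in an image.

Although COCO has fewer categories than the detection subset of ImageNet, it has more object instances per category than both PASCAL and ImageNet.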

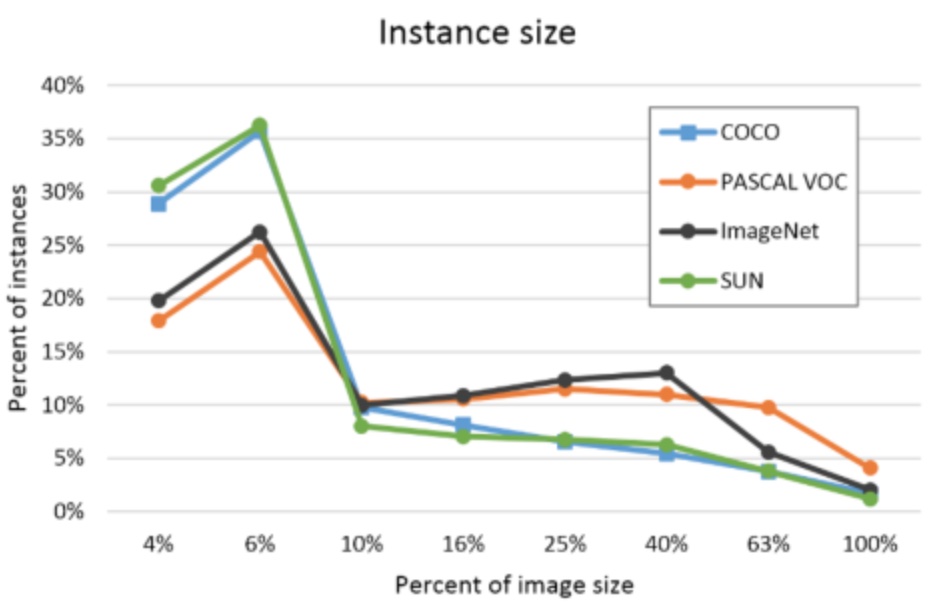

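- 3. Small objects make up a larger share of the COCO dataset

The 2014 release contains 82,783 training, 40,504 validation, and 40,775 test images (roughly 1/2 train, 1/4 val, and 1/4 test). The 2014 train + val data alone contains nearly 270,000 segmented people and a total of 886,000 segmented object instances. The cumulative 2015 release will contain a total of 165,482 train, 81,208 val, and 81,434 test images.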
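As a quick sanity check on the "roughly 1/2, 1/4, 1/4" description, the split fractions can be recomputed from the image counts quoted above. This is only an arithmetic sketch; the dictionary name is illustrative.

```python
# Image counts of the 2014 release, as quoted in the text above.
splits_2014 = {"train": 82783, "val": 40504, "test": 40775}

total = sum(splits_2014.values())
# Fraction of the whole release that each split represents.
fractions = {name: count / total for name, count in splits_2014.items()}

print(total)  # 164062
for name, frac in fractions.items():
    print(name, round(frac, 3))
```

The train split comes out slightly above one half and the other two slightly below one quarter each.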

5.6.1.2 Download

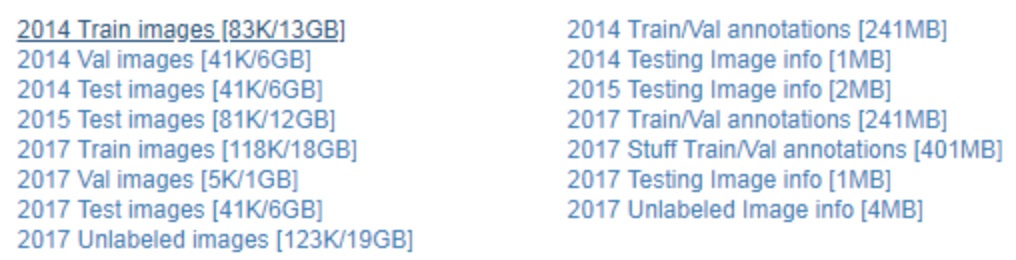

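All COCO datasets are fairly large; the official download links are listed below. For the 2017 dataset, the training set is 18 GB, the validation set 750 MB, and the test set 6.2 GB. For teaching purposes, the later examples train and test on a single image only, because reading many images would run too slowly.

The 2014 data can be downloaded from the official site. For 2015 only the test part is available; the train and val parts are not. The 2017 data adds no new images: it only re-splits the data so that the train set is larger, as follows:

COCO 2017 download links

- Images:

  - http://images.cocodataset.org/zips/train2017.zip

  - http://images.cocodataset.org/zips/val2017.zip

  - http://images.cocodataset.org/zips/test2017.zip

- Annotations:

  - http://images.cocodataset.org/annotations/annotations_trainval2017.zip

  - http://images.cocodataset.org/annotations/stuff_annotations_trainval2017.zip

  - http://images.cocodataset.org/annotations/image_info_test2017.zip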

5.6.2 COCO API

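- GitHub: https://github.com/Xinering/cocoapi

- More details about the API are on the website: http://mscoco.org/dataset/#download

5.6.2.1 Format

The COCO API provides Matlab, Python, and Lua interfaces. It supports loading, parsing, and visualizing the complete image annotation data. The website also provides related articles and tutorials. Before using the API and demos, first download the COCO images and annotations (category labels, category counts, pixel-level segmentation, and so on):

- Image data goes in the coco/images/ folder

- Annotation data goes in the coco/annotations/ folder

1. The images directory holds the training images

2. annotations holds the image annotations, in the following format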

/root/cv_project/image_detection/fasterRCNN/data/coco2017/annotations/instances_train2017.json

/root/cv_project/image_detection/fasterRCNN/data/coco2017/annotations/instances_val2017.json

{
    "info":  # 1. dataset information
    {
        "description": "COCO 2014 Dataset",
        "url": "http://cocodataset.org",
        "version": "1.0",
        "year": 2014,
        "contributor": "COCO Consortium",
        "date_created": "2017/09/01"
    },
    "images":  # 2. image information: an array with one entry per image
    [
        {   # details of a single image
            "license": 5,
            "file_name": "COCO_train2014_000000057870.jpg",
            "coco_url": "http://images.cocodataset.org/train2014/COCO_train2014_000000057870.jpg",
            "height": 480,
            "width": 640,
            "date_captured": "2013-11-14 16:28:13",
            "flickr_url": "http://farm4.staticflickr.com/3153/2970773875_164f0c0b83_z.jpg",
            "id": 57870
        },
        ......  # many images omitted here
        {
            "license": 4,
            "file_name": "COCO_train2014_000000475546.jpg",
            "coco_url": "http://images.cocodataset.org/train2014/COCO_train2014_000000475546.jpg",
            "height": 375,
            "width": 500,
            "date_captured": "2013-11-25 21:20:23",
            "flickr_url": "http://farm1.staticflickr.com/167/423175046_6cd9d0205a_z.jpg",
            "id": 475546
        }
    ],  # end of the image entries; licenses follow
    "licenses":
    [
        {
            "url": "http://creativecommons.org/licenses/by-nc-sa/2.0/",
            "id": 1,
            "name": "Attribution-NonCommercial-ShareAlike License"
        },
        .....  # the licenses in between are omitted here
        {
            "url": "http://creativecommons.org/licenses/by-nc-nd/2.0/",
            "id": 8,
            "name": "Attribution-NonCommercial-NoDerivs License"
        }
    ],
    "annotations":  # one entry per object instance
    [
        {
            "segmentation": [[312.29,562.89,402.25,511.49,400.96,425.38,398.39,372.69,
                              388.11,332.85,318.71,325.14,295.58,305.86,269.88,314.86,
                              258.31,337.99,217.19,321.29,182.49,343.13,141.37,348.27,
                              132.37,358.55,159.36,377.83,116.95,421.53,167.07,499.92,
                              232.61,560.32,300.72,571.89]],
            "area": 54652.9556,
            "iscrowd": 0,
            "image_id": 480023,
            "bbox": [116.95,305.86,285.3,266.03],
            "category_id": 58,
            "id": 86
        },
        .....  # many segmentation annotations omitted here
        {
            "segmentation": [[312.29,562.89,402.25,511.49,400.96,425.38,398.39,372.69,
                              388.11,332.85,318.71,325.14,295.58,305.86,269.88,314.86,
                              258.31,337.99,217.19,321.29,182.49,343.13,141.37,348.27,
                              132.37,358.55,159.36,377.83,116.95,421.53,167.07,499.92,
                              232.61,560.32,300.72,571.89]],
            "area": 54652.9556,
            "iscrowd": 0,
            "image_id": 480023,
            "bbox": [116.95,305.86,285.3,266.03],
            "category_id": 58,
            "id": 86
        }
    ],
    "categories":  # category information
    [
        {
            "supercategory": "person",
            "id": 1,
            "name": "person"
        },
        .......  # many category entries omitted here
        {
            "supercategory": "vehicle",
            "id": 2,
            "name": "bicycle"
        },
        {
            "supercategory": "kitchen",  # supercategory
            "id": 50,
            "name": "spoon"
        }
    ]
}
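To make the layout above concrete, here is a minimal sketch that reads a COCO-style document with the standard `json` module, groups annotations by `image_id`, and converts a `bbox` from COCO's `[x, y, width, height]` form to corner form. The tiny `SAMPLE` document is hand-made for illustration: the `file_name`, image size, and category name are hypothetical, and only the `bbox` values are taken from the example above.

```python
import json
from collections import defaultdict

# A tiny hand-made document in the COCO layout (illustrative values only).
SAMPLE = json.loads("""
{
  "images": [{"id": 480023, "file_name": "example.jpg", "height": 640, "width": 480}],
  "annotations": [
    {"id": 86, "image_id": 480023, "category_id": 58, "iscrowd": 0,
     "area": 54652.9556, "bbox": [116.95, 305.86, 285.3, 266.03]}
  ],
  "categories": [{"id": 58, "name": "example_category", "supercategory": "example"}]
}
""")


def index_annotations(doc):
    """Group annotations by image_id and map category ids to names."""
    anns_by_image = defaultdict(list)
    for ann in doc["annotations"]:
        anns_by_image[ann["image_id"]].append(ann)
    cat_names = {cat["id"]: cat["name"] for cat in doc["categories"]}
    return anns_by_image, cat_names


def xywh_to_xyxy(bbox):
    """Convert a COCO [x, y, width, height] box to [x1, y1, x2, y2]."""
    x, y, w, h = bbox
    return [x, y, x + w, y + h]


anns_by_image, cat_names = index_annotations(SAMPLE)
for ann in anns_by_image[480023]:
    x1, y1, x2, y2 = xywh_to_xyxy(ann["bbox"])
    print(cat_names[ann["category_id"]], round(x2, 2), round(y2, 2))
```

For the sample box the bottom-right corner is (402.25, 571.89), which matches the largest x and y values in the segmentation polygon shown above.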

5.6.2.2 Instantiating the COCO API Object from a JSON File

- Parameters

  - annotation_file=None (str): location of annotation file

The following packages need to be installed:

- pip install Cython

- pip install pycocotools

- pip install opencv-python

from pycocotools.coco import COCO

dataDir = './coco'

dataType = 'val2017'

annFile = '{}/annotations/instances_{}.json'.format(dataDir, dataType)

coco = COCO(annFile)

# If instantiation succeeds, the following is printed:

# loading annotations into memory...

# Done (t=0.81s)

# creating index...

# index created!

- For other APIs, see the official website
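Once the `coco` object exists, a typical next step is to query it. The sketch below wraps the commonly used pycocotools calls (`getCatIds`, `getImgIds`, `loadImgs`, `getAnnIds`, `loadAnns`) in a helper; the helper name `boxes_for_category` and its `max_images` parameter are illustrative and not part of pycocotools.

```python
def boxes_for_category(coco, category_name, max_images=5):
    """Return {file_name: [bbox, ...]} for images that contain `category_name`.

    `coco` is assumed to behave like a pycocotools.coco.COCO instance.
    Each bbox is in COCO's [x, y, width, height] form.
    """
    cat_ids = coco.getCatIds(catNms=[category_name])
    img_ids = coco.getImgIds(catIds=cat_ids)[:max_images]
    result = {}
    for img_info in coco.loadImgs(img_ids):
        ann_ids = coco.getAnnIds(imgIds=img_info["id"], catIds=cat_ids, iscrowd=None)
        result[img_info["file_name"]] = [ann["bbox"] for ann in coco.loadAnns(ann_ids)]
    return result


# Usage, assuming the `coco` object created above:
# print(boxes_for_category(coco, "person"))
```

Passing the category ids to both `getImgIds` and `getAnnIds` keeps the result limited to the requested category rather than returning every annotation in the selected images.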

5.6.2.3 Code for Loading Data through the COCO Interface

The modules below load the dataset through the COCO API.

coco.py defines CocoDataSet, the class that loads COCO data (similar to the Sequence class wrapped earlier). It loads the dataset from a COCO dataset directory.

Methods:

- def get_categories(self): Get list of category names.

- def __getitem__(self, idx): Load the image and its bboxes for the given index.

  - Args: idx: the index of images.

  - Returns: tuple: A tuple containing the following items: image, bboxes, labels.

transforms:

- class ImageTransform(object): preprocesses the image

  - includes resizing and normalization

- class BboxTransform(object): Preprocess ground truth bboxes.

  - rescale bboxes according to image size

  - flip bboxes (if needed)
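The two box operations listed for BboxTransform can be sketched in a few lines. This is not the project's implementation: the function names are illustrative, boxes are assumed to be in the (y1, x1, y2, x2) layout used by the docstrings later in this section, and the flip uses the simple `width - x` convention (some implementations use `width - x - 1`).

```python
def rescale_bboxes(bboxes, scale_factor):
    """Scale (y1, x1, y2, x2) boxes by the factor used to resize the image."""
    return [[value * scale_factor for value in box] for box in bboxes]


def flip_bboxes_horizontal(bboxes, img_width):
    """Mirror (y1, x1, y2, x2) boxes left-right inside an image of width `img_width`."""
    flipped = []
    for y1, x1, y2, x2 in bboxes:
        # The old right edge becomes the new left edge and vice versa.
        flipped.append([y1, img_width - x2, y2, img_width - x1])
    return flipped


boxes = [[10.0, 20.0, 50.0, 100.0]]
scaled = rescale_bboxes(boxes, 2.0)
flipped = flip_bboxes_horizontal(scaled, img_width=640)
print(scaled)   # [[20.0, 40.0, 100.0, 200.0]]
print(flipped)  # [[20.0, 440.0, 100.0, 600.0]]
```

Whenever the image is resized or flipped, the boxes must receive the same transformation, otherwise the ground truth no longer lines up with the pixels.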

Data-loading API

class CocoDataSet(object):
    def __init__(self,
                 dataset_dir,        # The root directory of the COCO dataset.
                 subset,             # What to load (train, val).
                 flip_ratio=0,       # Float. The ratio of flipping an image and its bounding boxes.
                 pad_mode='fixed',   # Which padded method to use (fixed, non-fixed).
                 mean=(0, 0, 0),     # Tuple. Image mean.
                 std=(1, 1, 1),      # Tuple. Image standard deviation.
                 scale=(1024, 800),  # Tuple of two integers.
                 debug=False):
        ...  # constructor body omitted in this excerpt

5.6.2.4 Example: Loading the 2017 Dataset

- coco.CocoDataSet: returns each dataset item as a tuple

- data_generator.DataGenerator: shuffles the data and exposes it as a generator

- tf.data.Dataset:

  - from_generator: builds a Dataset from a generator

import os

# Reduce TensorFlow log noise; set this before importing tensorflow so it takes effect.
os.environ["TF_CPP_MIN_LOG_LEVEL"] = "2"

import numpy as np
import tensorflow as tf
from tensorflow import keras

from detection.datasets import coco, data_generator

# Load the training subset with random flipping and mean subtraction.
train_dataset = coco.CocoDataSet('./data/coco2017', 'train',
                                 flip_ratio=0.5,
                                 pad_mode='fixed',
                                 mean=(123.675, 116.28, 103.53),
                                 std=(1., 1., 1.),
                                 scale=(800, 1216))

# Wrap the dataset in a generator, then expose it as a tf.data.Dataset.
train_generator = data_generator.DataGenerator(train_dataset)
train_tf_dataset = tf.data.Dataset.from_generator(
    train_generator, (tf.float32, tf.float32, tf.float32, tf.int32))
train_tf_dataset = train_tf_dataset.batch(1).prefetch(100).shuffle(100)

for (batch, inputs) in enumerate(train_tf_dataset):
    batch_imgs, batch_metas, batch_bboxes, batch_labels = inputs
    print(batch_imgs, batch_metas, batch_bboxes, batch_labels)

The printed result contains the image data, the batch meta information, the GT box positions, and the object labels.

5.6.2 Faster RCNN Training and Prediction

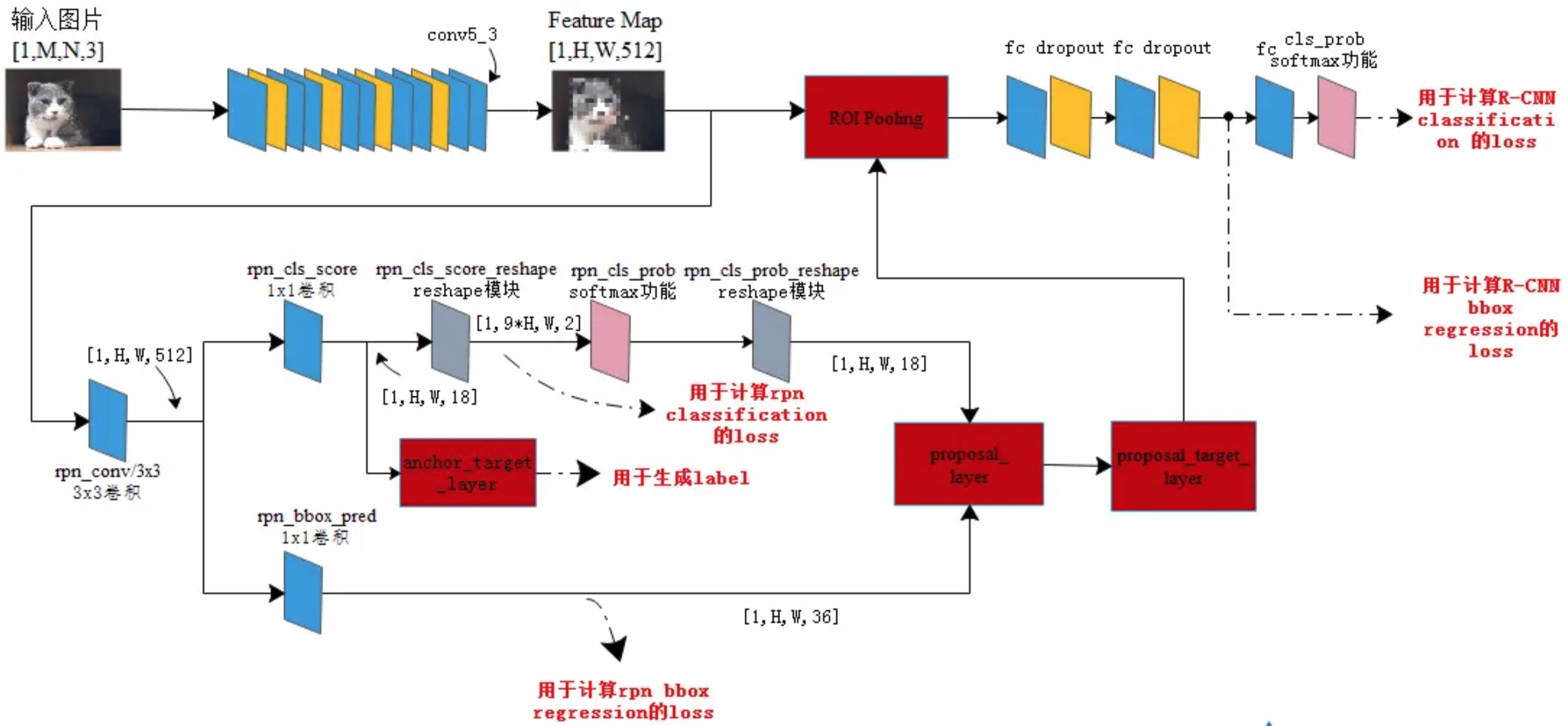

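5.6.2.1 The Faster RCNN Flowchart in Detail

The overall Faster R-CNN pipeline can be divided into three steps:

1. Feature extraction: the image passes through a pretrained network (commonly ZF, VGG16, or ResNet) to produce the image features.

2. Region Proposal Network: the extracted features pass through the RPN, which finds a certain number of RoIs (regions of interest).

3. Classification and regression: the RoIs and the image features are fed into RoI pooling, and the head that follows classifies and fine-tunes the RoIs produced by the RPN. For each RoI it decides whether the region contains an object and corrects the position and coordinates of the box.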

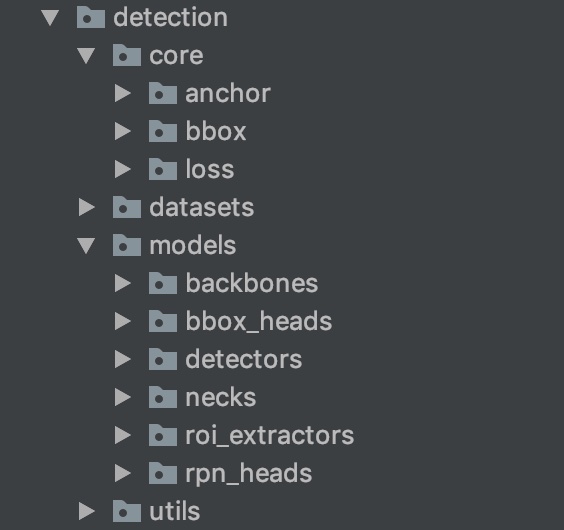

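5.6.2.2 Code Structure of the Open-Source Keras Faster RCNN Model

Source layout:

- core: core functionality, including losses and anchor generation

- models: network implementation, including the CNN backbone (ResNet), the Faster RCNN main structure, RoI, and RPN

- utils: computation utilities for the network

5.6.2.3 Training with the FasterRCNN Model

Using FasterRCNN is straightforward. Import it with from detection.models.detectors import faster_rcnn

model = faster_rcnn.FasterRCNN(num_classes=num_classes)

Training procedure

- 1. Build the model

- 2. Train iteratively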

from detection.models.detectors import faster_rcnn

# 1. Build the model
num_classes = len(train_dataset.get_categories())
model = faster_rcnn.FasterRCNN(num_classes=num_classes)
optimizer = keras.optimizers.SGD(1e-3, momentum=0.9, nesterov=True)

# 2. Train iteratively
for epoch in range(1):
    loss_history = []
    for (batch, inputs) in enumerate(train_tf_dataset):
        batch_imgs, batch_metas, batch_bboxes, batch_labels = inputs

        # Forward pass under the tape so the four losses can be differentiated.
        with tf.GradientTape() as tape:
            rpn_class_loss, rpn_bbox_loss, rcnn_class_loss, rcnn_bbox_loss = model(
                (batch_imgs, batch_metas, batch_bboxes, batch_labels), training=True)
            loss_value = rpn_class_loss + rpn_bbox_loss + rcnn_class_loss + rcnn_bbox_loss

        grads = tape.gradient(loss_value, model.trainable_variables)
        optimizer.apply_gradients(zip(grads, model.trainable_variables))
        loss_history.append(loss_value.numpy())

        if batch % 10 == 0:
            print('Epoch: %d, batch: %d, loss: %f' % (epoch+1, batch+1, np.mean(loss_history)))

For demonstration, training here runs a single iteration on one image. The output is:

Epoch: 1, batch: 1, loss: 41.552353

5.6.3 Core Code Walkthrough

5.6.3.1 Main Model Flow

def call(self, inputs, training=True):
    """
    :param inputs: [1, 1216, 1216, 3], [1, 11], [1, 14, 4], [1, 14]
    :param training:
    :return:
    """
    if training:  # training
        imgs, img_metas, gt_boxes, gt_class_ids = inputs
    else:  # inference
        imgs, img_metas = inputs

    # 1. Backbone: keep the ResNet stage outputs C2-C5
    # [1, 304, 304, 256] => [1, 152, 152, 512] => [1, 76, 76, 1024] => [1, 38, 38, 2048]
    C2, C3, C4, C5 = self.backbone(imgs, training=training)

    # FPN builds the feature pyramid
    # [1, 304, 304, 256] <= [1, 152, 152, 256] <= [1, 76, 76, 256] <= [1, 38, 38, 256] => [1, 19, 19, 256]
    P2, P3, P4, P5, P6 = self.neck([C2, C3, C4, C5], training=training)

    # Used by the RPN
    rpn_feature_maps = [P2, P3, P4, P5, P6]
    # Used by the RCNN head later
    rcnn_feature_maps = [P2, P3, P4, P5]

    # 2. RPN: produces 2000 proposals
    # [1, 369303, 2] [1, 369303, 2], [1, 369303, 4], includes all anchors on pyramid level of features
    rpn_class_logits, rpn_probs, rpn_deltas = self.rpn_head(
        rpn_feature_maps, training=training)

    # Filtering: [369303, 4] => [215169, 4], valid => [6000, 4], performance => [2000, 4], NMS
    proposals_list = self.rpn_head.get_proposals(
        rpn_probs, rpn_deltas, img_metas)

    # Assign proposals to GT boxes and mark positive/negative samples
    if training:  # get target value for these proposal target label and target delta
        rois_list, rcnn_target_matchs_list, rcnn_target_deltas_list = \
            self.bbox_target.build_targets(
                proposals_list, gt_boxes, gt_class_ids, img_metas)
    else:
        rois_list = proposals_list

    # rois_list only contains coordinates; rcnn_feature_maps holds the pyramid features (P2-P5) => [192, 7, 7, 256]
    # Implements ROI Pooling on multiple levels of the feature pyramid.
    pooled_regions_list = self.roi_align(
        (rois_list, rcnn_feature_maps, img_metas), training=training)

    # RCNN head outputs: [192, 81], [192, 81], [192, 81, 4]
    rcnn_class_logits_list, rcnn_probs_list, rcnn_deltas_list = \
        self.bbox_head(pooled_regions_list, training=training)

    # 3. Compute the RPN losses and the RCNN losses
    if training:
        rpn_class_loss, rpn_bbox_loss = self.rpn_head.loss(
            rpn_class_logits, rpn_deltas, gt_boxes, gt_class_ids, img_metas)
        rcnn_class_loss, rcnn_bbox_loss = self.bbox_head.loss(
            rcnn_class_logits_list, rcnn_deltas_list,
            rcnn_target_matchs_list, rcnn_target_deltas_list)

        return [rpn_class_loss, rpn_bbox_loss,
                rcnn_class_loss, rcnn_bbox_loss]
    else:
        detections_list = self.bbox_head.get_bboxes(
            rcnn_probs_list, rcnn_deltas_list, rois_list, img_metas)

        return detections_list

5.6.3.2 FPN (for reference)

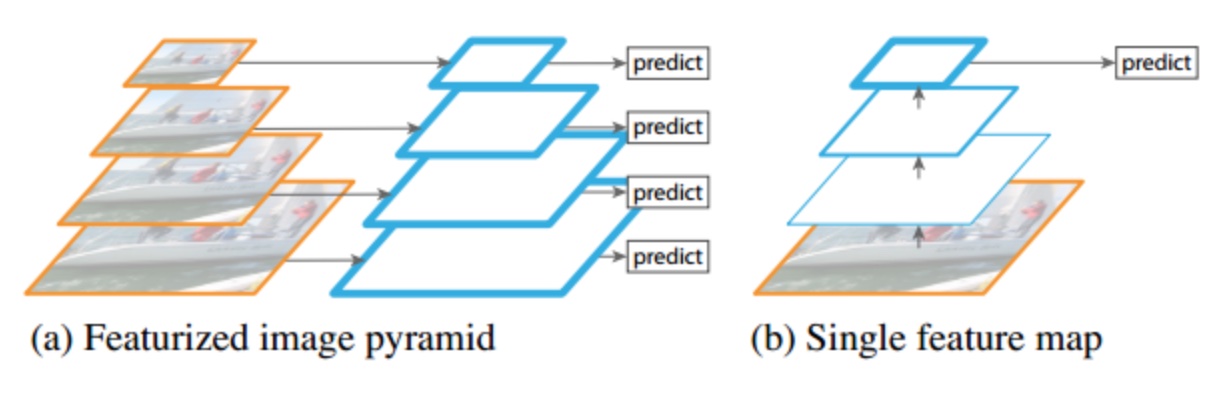

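The source code that implements the paper brings in the feature pyramid from Feature Pyramid Networks for Object Detection (FPN). The SSD algorithm discussed later takes a similar approach, and FPN is one of the key techniques in Mask RCNN. Image pyramids and feature pyramids are common techniques in traditional CV methods.

- Definition:

Adding FPN to the RPN

The same head structure (a 3×3 convolution followed by two sibling 1×1 convolutions) is attached to the output feature map of every pyramid level. Anchor boxes of multiple scales are no longer used on a single feature map; instead, each level uses anchor boxes of one size, and the size differs from level to level.

For the five pyramid levels P2 to P6, the anchor box areas are 32^2, 64^2, 128^2, 256^2, and 512^2 respectively. Each level also uses three aspect ratios, giving 15 anchor box types in total, 6 more than the original 9.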
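The anchor bookkeeping described above can be checked with a little arithmetic. The sketch below assumes a 1216×1216 padded input, strides of 4, 8, 16, 32, and 64 for P2 to P6 (consistent with the feature map shapes quoted in the model code), and aspect ratios of 0.5, 1, and 2; the ratios and the function name are assumptions for illustration.

```python
import math


def pyramid_anchor_summary(img_size=1216,
                           strides=(4, 8, 16, 32, 64),
                           scales=(32, 64, 128, 256, 512),
                           ratios=(0.5, 1.0, 2.0)):
    """Describe the anchors placed on each pyramid level (P2..P6)."""
    levels = []
    for stride, scale in zip(strides, scales):
        feat_size = math.ceil(img_size / stride)
        # Each shape keeps the area scale**2 while height / width equals the ratio.
        shapes = [(scale * math.sqrt(r), scale / math.sqrt(r)) for r in ratios]
        levels.append({
            "stride": stride,
            "feat_size": feat_size,
            "anchor_shapes": shapes,
            "num_anchors": feat_size * feat_size * len(ratios),
        })
    return levels


levels = pyramid_anchor_summary()
total_types = sum(len(level["anchor_shapes"]) for level in levels)
total_anchors = sum(level["num_anchors"] for level in levels)
print(total_types)    # 15
print(total_anchors)  # 369303
```

The total of 369303 anchors agrees with the `[1, 369303, ...]` shapes that appear in the model code comments.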

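5.6.3.3 RPN

The RPN supplies the RoI proposals. A 3×3 convolution is applied to the single feature map output by the backbone to act as a sliding window, followed by two 1×1 convolutions that perform objectness classification and bounding box regression relative to the anchor boxes, respectively. The final classifier and regressor together are called the head.

Overall logic

- 1. Compute the RPN outputs on every pyramid level: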

def call(self, inputs, training=True):
    '''
    Args
    ---
        inputs: list of feature maps, one per pyramid level, each of shape
            [batch_size, feat_map_height, feat_map_width, channels].

    Returns
    ---
        rpn_class_logits: [batch_size, num_anchors, 2]
        rpn_probs: [batch_size, num_anchors, 2]
        rpn_deltas: [batch_size, num_anchors, 4]
    '''
    layer_outputs = []

    for feat in inputs:  # one feature map per pyramid level
        # Shapes per level:
        # feat (1, 304, 304, 256): rpn_class_raw (1, 304, 304, 6)   -> rpn_class_logits (1, 277248, 2)
        #                          rpn_delta_pred (1, 304, 304, 12) -> rpn_deltas (1, 277248, 4)
        # feat (1, 152, 152, 256): rpn_class_raw (1, 152, 152, 6)   -> rpn_class_logits (1, 69312, 2)
        #                          rpn_delta_pred (1, 152, 152, 12) -> rpn_deltas (1, 69312, 4)
        # feat (1, 76, 76, 256):   rpn_class_raw (1, 76, 76, 6)     -> rpn_class_logits (1, 17328, 2)
        #                          rpn_delta_pred (1, 76, 76, 12)   -> rpn_deltas (1, 17328, 4)
        # feat (1, 38, 38, 256):   rpn_class_raw (1, 38, 38, 6)     -> rpn_class_logits (1, 4332, 2)
        #                          rpn_delta_pred (1, 38, 38, 12)   -> rpn_deltas (1, 4332, 4)
        # feat (1, 19, 19, 256):   rpn_class_raw (1, 19, 19, 6)     -> rpn_class_logits (1, 1083, 2)
        #                          rpn_delta_pred (1, 19, 19, 12)   -> rpn_deltas (1, 1083, 4)

        # Shared 3x3 convolution acting as the sliding window
        shared = self.rpn_conv_shared(feat)
        shared = tf.nn.relu(shared)

        # Objectness branch: 2 scores (background, foreground) per anchor
        x = self.rpn_class_raw(shared)
        rpn_class_logits = tf.reshape(x, [tf.shape(x)[0], -1, 2])
        rpn_probs = tf.nn.softmax(rpn_class_logits)

        # Regression branch: 4 deltas per anchor
        x = self.rpn_delta_pred(shared)
        rpn_deltas = tf.reshape(x, [tf.shape(x)[0], -1, 4])

        layer_outputs.append([rpn_class_logits, rpn_probs, rpn_deltas])
        # Per-level output shapes:
        # (1, 277248, 2) (1, 277248, 2) (1, 277248, 4)
        # (1, 69312, 2) (1, 69312, 2) (1, 69312, 4)
        # (1, 17328, 2) (1, 17328, 2) (1, 17328, 4)
        # (1, 4332, 2) (1, 4332, 2) (1, 4332, 4)
        # (1, 1083, 2) (1, 1083, 2) (1, 1083, 4)

    # Concatenate the outputs of all levels along the anchor axis
    outputs = list(zip(*layer_outputs))
    outputs = [tf.concat(list(o), axis=1) for o in outputs]
    rpn_class_logits, rpn_probs, rpn_deltas = outputs
    # (1, 369303, 2) (1, 369303, 2) (1, 369303, 4)

    return rpn_class_logits, rpn_probs, rpn_deltas

Proposals are then selected from these outputs by filtering on score, applying NMS, and so on:

def get_proposals(self,
                  rpn_probs,
                  rpn_deltas,
                  img_metas,
                  with_probs=False):
    '''
    Calculate proposals.

    Args
    ---
        rpn_probs: [batch_size, num_anchors, (bg prob, fg prob)]
        rpn_deltas: [batch_size, num_anchors, (dy, dx, log(dh), log(dw))]
        img_metas: [batch_size, 11]
        with_probs: bool.

    Returns
    ---
        proposals_list: list of [num_proposals, (y1, x1, y2, x2)] in
            normalized coordinates if with_probs is False.
            Otherwise, the shape of proposals in proposals_list is
            [num_proposals, (y1, x1, y2, x2, score)]

    Note that num_proposals is no more than proposal_count. And different
    images in one batch may have different num_proposals.
    '''
    # [369303, 4], [b, 11]
    anchors, valid_flags = self.generator.generate_pyramid_anchors(img_metas)

    # [b, N, (background prob, foreground prob)], get anchor's foreground prob, [1, 369303]
    rpn_probs = rpn_probs[:, :, 1]

    # [[1216, 1216]]
    pad_shapes = calc_pad_shapes(img_metas)

    proposals_list = [
        self._get_proposals_single(
            rpn_probs[i], rpn_deltas[i], anchors, valid_flags[i], pad_shapes[i], with_probs)
        for i in range(img_metas.shape[0])
    ]

    return proposals_list
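The per-image work hidden inside `_get_proposals_single` is not shown in this section. As a rough illustration of the two ideas involved, the sketch below decodes (dy, dx, log(dh), log(dw)) deltas against (y1, x1, y2, x2) anchors and then applies greedy NMS. It is plain Python written for this explanation, not the project's code: the 0.7 IoU threshold is an assumption, the 2000 cap follows the shape comments in the model code, and the real implementation may additionally rescale deltas and clip boxes.

```python
import math


def apply_deltas(anchor, delta):
    """Shift and resize a (y1, x1, y2, x2) anchor by (dy, dx, log(dh), log(dw))."""
    y1, x1, y2, x2 = anchor
    dy, dx, dh, dw = delta
    h, w = y2 - y1, x2 - x1
    cy, cx = y1 + 0.5 * h + dy * h, x1 + 0.5 * w + dx * w
    h, w = h * math.exp(dh), w * math.exp(dw)
    return (cy - 0.5 * h, cx - 0.5 * w, cy + 0.5 * h, cx + 0.5 * w)


def iou(a, b):
    """Intersection over union of two (y1, x1, y2, x2) boxes."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.7, max_keep=2000):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
        if len(keep) == max_keep:
            break
    return keep


anchors = [(0, 0, 10, 10), (0, 1, 10, 11), (50, 50, 60, 60)]
deltas = [(0.0, 0.0, 0.0, 0.0)] * 3          # zero deltas leave the anchors unchanged
fg_probs = [0.9, 0.8, 0.7]                   # foreground probabilities
boxes = [apply_deltas(a, d) for a, d in zip(anchors, deltas)]
keep = nms(boxes, fg_probs)
print(keep)  # [0, 2]
```

The second box overlaps the first with IoU 90/110, which exceeds 0.7, so it is suppressed; the third box does not overlap anything and is kept.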

Compute the RPN loss, which consists of a classification loss and a regression loss:

def loss(self, rpn_class_logits, rpn_deltas, gt_boxes, gt_class_ids, img_metas):
    """
    :param rpn_class_logits: [N, 2]
    :param rpn_deltas: [N, 4]
    :param gt_boxes: [GT_N]
    :param gt_class_ids: [GT_N]
    :param img_metas: [11]
    :return:
    """
    # valid_flags indicates anchors located in padded area or not.
    anchors, valid_flags = self.generator.generate_pyramid_anchors(img_metas)

    # Match anchors to the ground-truth boxes
    rpn_target_matchs, rpn_target_deltas = self.anchor_target.build_targets(
        anchors, valid_flags, gt_boxes, gt_class_ids)

    rpn_class_loss = self.rpn_class_loss(
        rpn_target_matchs, rpn_class_logits)
    rpn_bbox_loss = self.rpn_bbox_loss(
        rpn_target_deltas, rpn_target_matchs, rpn_deltas)

    return rpn_class_loss, rpn_bbox_loss
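The anchor matching performed by `anchor_target.build_targets` is not listed here. A common scheme, sketched below under stated assumptions, labels each anchor by its best IoU with any ground-truth box: positive at or above 0.7, negative below 0.3, and ignored in between. The thresholds, the label values, and the function names are assumptions for illustration, and the extra rule that gives every ground-truth box at least one positive anchor is left out for brevity.

```python
def iou(a, b):
    """Intersection over union of two (y1, x1, y2, x2) boxes."""
    inter_h = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_h * inter_w
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_anchors(anchors, gt_boxes, pos_iou=0.7, neg_iou=0.3):
    """Return one label per anchor: 1 = positive, 0 = negative, -1 = ignored."""
    labels = []
    for anchor in anchors:
        best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if best >= pos_iou:
            labels.append(1)
        elif best < neg_iou:
            labels.append(0)
        else:
            labels.append(-1)
    return labels


gt_boxes = [(0, 0, 10, 10)]
anchors = [(0, 0, 10, 10),     # IoU 1.0 with the GT box
           (0, 4, 10, 14),     # IoU 60 / 140, in the ignored band
           (20, 20, 30, 30)]   # no overlap
labels = match_anchors(anchors, gt_boxes)
print(labels)  # [1, -1, 0]
```

Only positive and negative anchors would contribute to the classification loss, and only positive anchors to the box regression loss.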

5.6.3.4 Test Output

Read one image from the validation set and feed it to the model for prediction:

def test():
    # Load the validation data and build the model
    val_dataset = coco.CocoDataSet('./data/coco2017', 'val')
    val_generator = data_generator.DataGenerator(val_dataset)
    # Labels are integers, matching the dtypes used for the training dataset.
    tf_dataset = tf.data.Dataset.from_generator(
        val_generator, (tf.float32, tf.float32, tf.float32, tf.int32))
    tf_dataset = tf_dataset.batch(1).prefetch(100).shuffle(100)

    num_classes = len(val_dataset.get_categories())
    model = faster_rcnn.FasterRCNN(num_classes=num_classes)

    for (batch, inputs) in enumerate(tf_dataset):
        # Only the image and its meta information are needed for inference.
        img, img_meta, _, _ = inputs
        print(img, img_meta)

        detections_list = model((img, img_meta), training=False)
        print(detections_list)


if __name__ == '__main__':
    # train()
    test()

Output

[<tf.Tensor: id=10027, shape=(20, 6), dtype=float32, numpy=

array([[0.000e+00, 0.000e+00, 0.000e+00, 0.000e+00, 3.300e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[0.000e+00, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 7.000e+00, 1.000e+00],

[1.024e+03, 1.024e+03, 1.024e+03, 1.024e+03, 2.700e+01, 1.000e+00]],

dtype=float32)>]
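Each row of the tensor above appears to hold a box, a class id, and a score. The sketch below assumes the layout (y1, x1, y2, x2, class_id, score), which is inferred from the printed values and the (y1, x1, y2, x2) convention in the docstrings rather than confirmed by the source. The first three sample rows are copied from the output; the last row is hypothetical and added only to show a non-degenerate box.

```python
def parse_detections(rows):
    """Turn rows of (y1, x1, y2, x2, class_id, score) into dicts, skipping empty boxes."""
    parsed = []
    for y1, x1, y2, x2, class_id, score in rows:
        if y2 <= y1 or x2 <= x1:
            continue  # zero-area box, nothing to draw or evaluate
        parsed.append({
            "box": (y1, x1, y2, x2),
            "class_id": int(class_id),
            "score": float(score),
        })
    return parsed


rows = [
    [0.0, 0.0, 0.0, 0.0, 33.0, 1.0],              # from the output above
    [0.0, 1024.0, 1024.0, 1024.0, 27.0, 1.0],     # from the output above
    [1024.0, 1024.0, 1024.0, 1024.0, 7.0, 1.0],   # from the output above
    [100.0, 200.0, 300.0, 400.0, 1.0, 0.9],       # hypothetical valid detection
]
detections = parse_detections(rows)
print(len(detections))  # 1
```

Under this reading, every box in the printed output has zero width or height, which is what one would expect when the model in `test()` is freshly constructed and has not loaded trained weights.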

5.6.4 Summary

- The COCO dataset and how to use its API

- Using the FasterRCNN interface

- Training a model and running prediction with FasterRCNN